About a month ago I got a chance to try the HTC/Valve Vive. It is completely amazing. Room-scale VR is super awesome, and half of the reason for that is input. The controllers themselves are so-so. I'm not yet totally sold on these funky touchpad Wiimote things. But having some sort of three-dimensional input solution for VR worlds is no longer a nice-to-have, it's a requirement. The difference between a keyboard/mouse and high-precision tracking of head/hands is roughly the same as the difference between an LCD monitor and an HMD. The Vive really seems like the full package we need for consumer-grade VR. I want one, and I want one now. I tried the Philip J. Fry approach - but it was not very effective.

After realizing it was going to take more than half an hour to get my hands on a Vive setup of my own, I quickly set out to create an interim setup that I could test with until I get the real thing. I'm using a Kinect v2 to do the positional tracking of my head and hands, letting the Rift do rotation, and using an Xbox 360 controller in each hand for buttons. This setup will cost you a few hundred dollars for a solution that maybe a dozen developers will support and that will be irrelevant in six months. So if you're a consumer, this is not for you. If you're a developer looking to start working on concepts for the Vive before you can actually get one, here's what you'll need to make one yourself:

Parts List

- Oculus Rift

- Kinect v2

- USB 3.0, a 64-bit processor, and Windows 8 are required for the Kinect v2

- Kinect adapter for Windows

- Two Xbox 360 controllers (PS3 / PS4 / Xbox One controllers should work too)

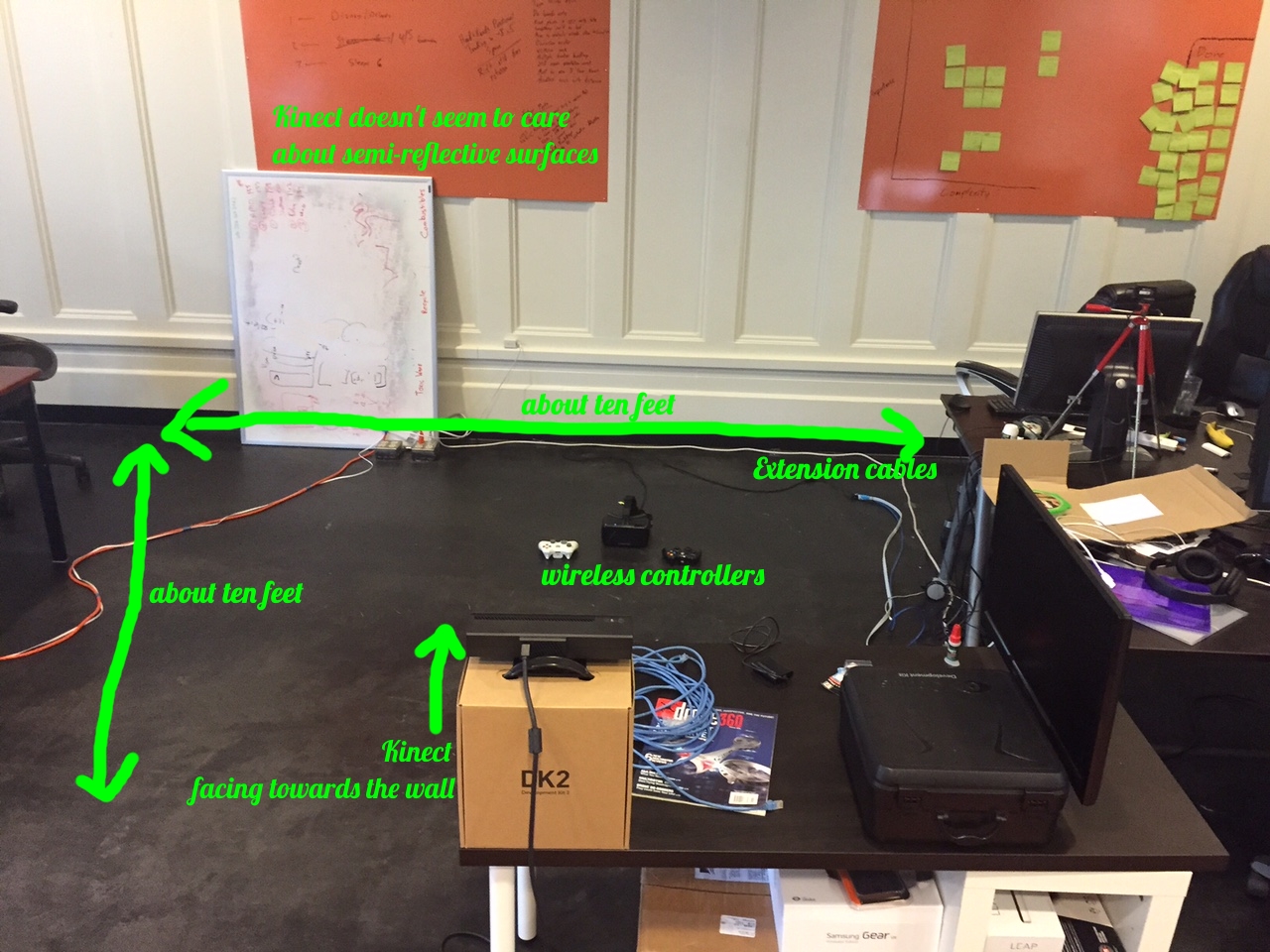

- Roughly a 10'x10' space

Software List

- Oculus Rift Runtime for Windows

- Kinect for Windows SDK 2.0

- Xbox 360 wireless controller software (may need to install in compatibility mode)

- Unity 5 (Unity 4 will work too, you'll just need Pro for the Kinect plugin)

Kinect Setup

I recommend clearing out a roughly 10 foot by 10 foot space. I'm currently working at a rad VR startup and we have the room, but if your space is a bit smaller that's okay. Just keep in mind that the bigger your space is, the more awesome it's going to be. The Kinect has a minimum range of about 2 feet and a maximum of about 12. The tracking does get a little janky at either extreme.

It's also a good idea to have a solid wall as the backdrop. At first I had it pointed at my computer desk and was getting a lot of jitter. Once I moved it so it was pointed at a wall the jitter significantly decreased.

Place the Kinect at about waist height to get the best accuracy at the widest variety of ranges.

You can easily check the range of the Kinect's tracking by opening up Kinect Studio v2.0, which should have been installed with the Kinect for Windows SDK. (Mine's at C:\Program Files\Microsoft SDKs\Kinect\v2.0_1409\Tools\KinectStudio)

Computer Location

Placing your computer at the far reach of your Kinect seems to work the best for me. That way the cord for the DK2 is usually behind or to one side of me instead of coming over my shoulder. Ideally, we'd install some sort of cable boom solution so we never trip over the cord. But for now this is ok.

Known Issues

The Kinect is an amazing piece of technology, but optical tracking is not ideal for VR. The main issue is called occlusion. If you're facing away from the camera, then it can't see your hands, and doesn't know where they are. This would be a bigger issue if we were trying to use this for consumer grade VR, but for early development it doesn't cause too many problems.

It's hard to tell which avatar has the headset on. I've had the best luck with attaching it to the first avatar the Kinect picks up and sticking with that avatar until it loses tracking, then reassigning to whichever remaining avatar is closest to the camera. That seems to account for most of the scenarios I run into.
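Here's a rough sketch of that "stick with one avatar, then fall back to the closest" rule in plain Python. It is not the code from the template (which is Unity C#); the `HeadsetAssigner` class, the tracking-id dictionary, and the camera-at-origin coordinate convention are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class HeadsetAssigner:
    """Hypothetical helper: keeps the headset on one body until it is lost."""

    current_id: Optional[int] = None

    def update(self, bodies: Dict[int, Vec3]) -> Optional[int]:
        # `bodies` maps a tracking id to a body position in camera space
        # (camera assumed at the origin). Only tracked bodies are present.
        if self.current_id in bodies:
            return self.current_id  # still tracked: never switch mid-session
        if not bodies:
            self.current_id = None  # nobody in view
            return None
        # Tracking was lost (or never started): pick the body nearest the camera.
        self.current_id = min(
            bodies, key=lambda i: sum(c * c for c in bodies[i])
        )
        return self.current_id
```

Calling `update` once per frame keeps the assignment stable even when someone else walks closer to the camera; it only changes hands when the current body disappears. If two bodies show up in the very same frame, this sketch picks the closer one.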

The version of InControl included with this package is the free version which is a bit old now. It's throwing an error for me about having multiple XInput plugins (which I don't see). That error doesn't seem to be causing any actual problems though, so I'm ignoring it for now. You also have to have the game window selected for InControl to recognize controller input. (Had fun troubleshooting this while making the gifs!)

Rotation doesn't work very well. Trying to parse out joint rotation from depth data is not a trivial problem. The Kinect does pretty well at it given the data it gets, but it is not super reliable.

The Kinect is built to do motion tracking - not position tracking. So you're going to get a bit of jitter trying to do positional tracking with it. The common solution to this problem is to just average out the location over multiple frames. This has a latency cost, but you get the benefit of not vomiting all over your equipment.
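To make the averaging idea concrete, here's a minimal sketch of a moving average over the last few position samples, written in plain Python. The `MovingAverage` class and the window size of 5 are assumptions for illustration, not what the template does internally.

```python
from collections import deque
from typing import Deque, Tuple

Vec3 = Tuple[float, float, float]


class MovingAverage:
    """Hypothetical filter: averages the most recent `window` positions."""

    def __init__(self, window: int = 5) -> None:
        if window < 1:
            raise ValueError("window must be at least 1")
        # deque(maxlen=...) drops the oldest sample automatically.
        self.samples: Deque[Vec3] = deque(maxlen=window)

    def add(self, position: Vec3) -> Vec3:
        """Store a new raw sample and return the smoothed position."""
        self.samples.append(position)
        n = len(self.samples)
        return tuple(sum(s[i] for s in self.samples) / n for i in range(3))
```

The latency cost shows up directly in the window size: for steady motion, an average over N frames trails the real position by roughly (N - 1) / 2 frames. A bigger window means less jitter and more lag, so it's worth tuning with the headset on.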

Unity Quickstart Template

I've taken the Oculus SDK, the Kinect V2 SDK, and an input mapper called InControl (which is awesome) and edited them a bit to create a template to easily get started on new projects. The Kinect V2 Unity plugin is (as of this posting) in the very early days of development. So I've made some improvements and added some functionality that has been common to most of the prototyping I've been doing.

- Tracks the first avatar who enters the space and assigns the OVR headset to them. When tracking is lost on that avatar it is reassigned to the next closest avatar (KinectManager.Instance.CurrentAvatar)

- Stubs for avatar initialization and destruction (KinectAvatar.Initialize / KinectAvatar.Kill)

- Kinect joint to Unity transform mapping (KinectAvatar.JointMapping)

- Common joint transform shortcuts (KinectAvatar.LeftHand, .RightHand, .Head)

- A shortcut for getting a Ray for where the user is pointing (KinectAvatar.GetHandRay)

- Position / Rotation smoothing. Higher numbers mean a faster response (KinectAvatar.PositionSmoothing, .RotationSmoothing)

- Avatar Scale (KinectAvatar.Scale)

- "Chaperone" type wall warning system (WallChecker.cs / Walls.prefab)

- Recenter OVR camera with Start button (look at the Kinect camera when hitting this)
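As an illustration of the pointing-ray shortcut in the list above, here's one way such a ray could be built from joint positions alone. This is a sketch in plain Python, not the actual `KinectAvatar.GetHandRay` implementation; using the elbow-to-hand direction is an assumption, chosen because it avoids relying on joint rotation data.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def hand_ray(elbow: Vec3, hand: Vec3) -> Optional[Tuple[Vec3, Vec3]]:
    """Hypothetical helper: ray starting at the hand, pointing along the forearm.

    Returns (origin, unit_direction), or None when the two joints coincide
    and no direction can be derived.
    """
    delta = tuple(h - e for h, e in zip(hand, elbow))
    length = math.sqrt(sum(c * c for c in delta))
    if length < 1e-6:
        return None  # degenerate sample, e.g. a tracking glitch
    direction = tuple(c / length for c in delta)
    return hand, direction
```

Feeding it smoothed joint positions rather than raw ones should keep the ray from wobbling, since a small amount of jitter at the hand turns into a large swing a few meters out.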

Template Example

In the template I've included an example scene that lets you do simple building with cubes, color them, and shoot them. You can use one or two controllers; they both do the same things right now.

- Start - Recenters OVR camera

- Bumper - Hold to aim, release to shoot a bullet that adds a rigidbody to blocks you've created

- Trigger - Hold inside a block to move. Press outside a block to create. Release to place.

- Stick - Press down to bring up the color menu. Move the stick to select a color. I took this concept (poorly) from the absolutely amazing Tilt Brush.
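The trigger behavior in the list above boils down to a tiny state machine: press, hold, release. Here's a sketch of it in plain Python. The `Block` and `Builder` names, the axis-aligned cube test, and the 0.1 half-size are all made up for illustration and don't come from the example scene.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Block:
    center: Vec3
    half: float = 0.1  # half the cube's edge length (arbitrary value)

    def contains(self, p: Vec3) -> bool:
        # Axis-aligned test: inside if within `half` of the center on every axis.
        return all(abs(pc - cc) <= self.half for pc, cc in zip(p, self.center))


@dataclass
class Builder:
    blocks: List[Block] = field(default_factory=list)
    held: Optional[Block] = None

    def press(self, hand: Vec3) -> None:
        # Trigger pressed: grab the block under the hand, or create a new one.
        self.held = next((b for b in self.blocks if b.contains(hand)), None)
        if self.held is None:
            self.held = Block(center=hand)
            self.blocks.append(self.held)

    def hold(self, hand: Vec3) -> None:
        # Trigger held: the grabbed block follows the hand.
        if self.held is not None:
            self.held.center = hand

    def release(self) -> None:
        # Trigger released: the block stays where it was last moved.
        self.held = None
```

With two controllers you'd keep one `held` slot per hand so each hand can carry its own block.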

Warning!

Set up your bounds first or you will run into things! I included WallChecker.cs with this package that I use to do this. It will fade in all the walls when you get close to one to account for backing into things. But you have to manually position the walls to match your space. This could technically be accomplished with the Kinect using the raw depth data but I haven't had a chance to do it.
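For a feel of how a proximity fade like this can work, here's a minimal sketch in plain Python. It is not the logic from WallChecker.cs; the rectangular room centered on the origin, the `wall_alpha` name, and the 0.5 fade distance are assumptions for illustration.

```python
def wall_alpha(
    x: float,
    z: float,
    half_width: float,
    half_depth: float,
    fade_distance: float = 0.5,
) -> float:
    """Hypothetical helper: opacity (0..1) for the walls of a rectangular room.

    The room is assumed to be centered on the origin, spanning
    [-half_width, half_width] on x and [-half_depth, half_depth] on z.
    """
    # Distance from the player to the nearest of the four walls.
    nearest = min(half_width - abs(x), half_depth - abs(z))
    # Fully transparent beyond fade_distance, fully opaque at (or past) a wall.
    t = 1.0 - nearest / fade_distance
    return max(0.0, min(1.0, t))
```

A 10'x10' space is roughly 3 m by 3 m, so `half_width` and `half_depth` would both be about 1.5 in meters. Because the check only uses position, it works when you're backing up and can't see what's behind you.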

Download

I've got the project up on GitHub here: https://github.com/zite/ViveEmuTemplate

Let me know if you have any questions or issues with this setup and I'll do my best to help. Also shoot me a message here or on Twitter (@zite00) if you end up building something cool! This is quite a lot of fun to play with and has definitely made me a believer in room-scale VR.
